As I covered previously in “Introduction to Neural Networks,” artificial neural networks (ANNs) are simplified representations of biological neural networks in which the basic computational unit, known as an artificial neuron or node, represents its biological counterpart, the neuron. To understand how neural networks can be taught to identify and classify, it is first necessary to explore the characteristics and functionality of their basic building block, the node.

The Node

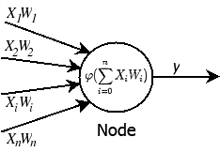

For a given node, there are n + 1 inputs labeled \(X_0, X_1, \ldots, X_n\). Each input also has a weight associated with it: \(W_0, W_1, \ldots, W_n\). Again, these inputs represent biological synapses connected to the dendrites of the neuron. The activation level of the node, a, is determined by calculating the sum of each input multiplied by its weight.

So,

\[a = X_0W_0 + X_1W_1 + \cdots + X_nW_n\]

or,

\[a = \sum_{i=0}^{n} X_iW_i\]

This activation value is passed through a function known as the activation or transfer function, \(\phi\), to determine the output of the node, y.

\[y = \phi(a)\]

Substituting for a gives us:

\[y = \phi\left(\sum_{i=0}^{n}X_iW_i\right)\]
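The full computation for one node fits in a few lines of Python. In this minimal sketch the function names and sample values are illustrative, not taken from any library: the transfer function φ is passed in as an ordinary Python function, so any transfer function can be plugged in. The identity function and the built-in `math.tanh` are used here only as stand-ins.

```python
import math


def node_output(inputs, weights, phi):
    """Compute y = phi(a), where a = sum of X_i * W_i over all inputs."""
    if len(inputs) != len(weights):
        raise ValueError("each input needs exactly one weight")
    a = sum(x * w for x, w in zip(inputs, weights))
    return phi(a)


def identity(a):
    """A linear transfer function: the output equals the activation."""
    return a


X = [1, 2, 3]
W = [0.5, -1, 2]   # activation a = 0.5 - 2 + 6 = 4.5

print(node_output(X, W, identity))   # 4.5
print(node_output(X, W, math.tanh))  # tanh(4.5), about 0.9998
```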

Transfer Functions

The transfer function can be either linear or non-linear. Linear functions are seldom chosen for multi-layer neural networks, because a multi-layer network made up of nodes with linear transfer functions has an equivalent single-layer network: a composition of linear functions is itself linear. To realize the advantages of a multi-layer network, non-linear transfer functions must be used.
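A small numerical check makes this equivalence concrete. The plain-Python sketch below uses hypothetical weights, no bias terms, and the identity as every node's (linear) transfer function. It feeds an input through two layers, then through a single layer whose weight matrix is the product of the two, and both paths give the same output.

```python
def matmul(A, B):
    """Multiply matrix A (m x k) by matrix B (k x n), both as lists of rows."""
    return [[sum(A[i][t] * B[t][j] for t in range(len(B)))
             for j in range(len(B[0]))]
            for i in range(len(A))]


def matvec(M, x):
    """Multiply matrix M by the column vector x (a plain list)."""
    return [sum(m * v for m, v in zip(row, x)) for row in M]


# Hypothetical two-layer network of identity-transfer nodes.
# Each row holds one node's weights.
W1 = [[1, 2],
      [3, -1]]   # hidden layer: 2 nodes, 2 inputs each
W2 = [[2, 1]]    # output layer: 1 node, 2 inputs

x = [4, 5]

# Two layers: feed x through W1, then feed that result through W2.
two_layer = matvec(W2, matvec(W1, x))

# One layer: the single weight matrix W = W2 * W1 does the same job.
W = matmul(W2, W1)
one_layer = matvec(W, x)

print(W, two_layer, one_layer)   # [[5, 3]] [35] [35]
```

The same collapse happens with any linear transfer function of the form φ(a) = ka, since the constant k can be folded into the weights.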

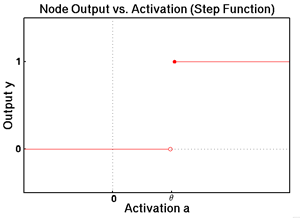

The simplest transfer function is the step function, also known as the threshold function, which is discontinuous. Its output is binary, usually 1 or 0, although -1 and 1 are also used. If a node has a threshold value, θ, then y = 1 when a ≥ θ; otherwise y = 0.

\[y = \begin{cases} 1, & \text{if } a \geq \theta, \\ 0, & \text{otherwise}. \end{cases}\]
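A sketch of the step function in Python follows. The threshold θ = 0.5 and the sample activations are hypothetical, and the optional `low` and `high` parameters are illustrative conveniences for switching between 0/1 and -1/1 outputs. The comparison uses `>=` to match the definition above.

```python
def step(a, theta, low=0, high=1):
    """Step (threshold) transfer function: high if a >= theta, else low."""
    return high if a >= theta else low


theta = 0.5
activations = [-1.0, 0.49, 0.5, 2.0]

print([step(a, theta) for a in activations])          # [0, 0, 1, 1]
# Same threshold, but with outputs of -1 and 1 instead of 0 and 1.
print([step(a, theta, low=-1) for a in activations])  # [-1, -1, 1, 1]
```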

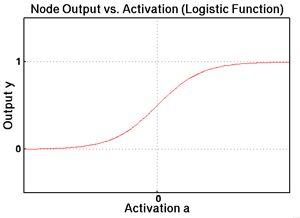

Non-linear transfer functions are almost always sigmoid functions. Sigmoid functions are a group of functions so named because of their “S” shape. The properties of a sigmoid function include:

- is real-valued and differentiable

- has a non-negative or non-positive first derivative

- is bounded, approaching one minimum value and one maximum value (its horizontal asymptotes)

Because the integral of any smooth, positive, bump-shaped function is sigmoidal, there are many functions to choose from, but the most common sigmoid transfer function is a special case of the logistic function:

\[\phi(a) = \frac{1}{1 + e^{-a}}\]
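The logistic function and its first derivative, φ(a)(1 - φ(a)), can be written directly in Python. This minimal sketch (function names are illustrative) also checks two of the listed properties numerically over a range of sample inputs: the derivative is never negative, and the outputs stay between 0 and 1. The two-branch form is one common way to keep `math.exp` from overflowing for large negative inputs; both branches compute the same function.

```python
import math


def logistic(a):
    """Logistic sigmoid phi(a) = 1 / (1 + e^(-a)); outputs between 0 and 1."""
    if a >= 0:
        return 1.0 / (1.0 + math.exp(-a))
    # Equivalent form for negative a, so math.exp(-a) cannot overflow.
    z = math.exp(a)
    return z / (1.0 + z)


def logistic_derivative(a):
    """First derivative of the logistic function: phi(a) * (1 - phi(a))."""
    s = logistic(a)
    return s * (1.0 - s)


print(logistic(0.0))             # 0.5, the midpoint of the "S"
print(logistic_derivative(0.0))  # 0.25, where the curve is steepest

samples = [x / 2 for x in range(-20, 21)]   # -10.0, -9.5, ..., 10.0
print(all(logistic_derivative(x) >= 0.0 for x in samples))  # True
print(all(0.0 <= logistic(x) <= 1.0 for x in samples))      # True
```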

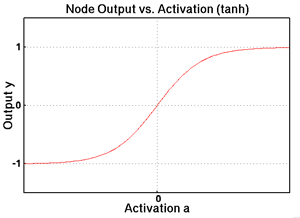

If an output range of -1 to 1 is desired instead, the hyperbolic tangent is often used:

\[\phi(a) = \tanh(a) = \frac{e^{a} - e^{-a}}{e^{a} + e^{-a}}\]
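The hyperbolic tangent is a stretched and shifted logistic function, tanh(a) = 2φ(2a) - 1, which is why it has the same S shape but outputs between -1 and 1. The sketch below, with an illustrative helper name, compares Python's `math.tanh`, the explicit exponential formula, and that rescaled logistic at a few sample points.

```python
import math


def logistic(a):
    """Logistic sigmoid phi(a) = 1 / (1 + e^(-a))."""
    return 1.0 / (1.0 + math.exp(-a))


def tanh_explicit(a):
    """Hyperbolic tangent written out: (e^a - e^-a) / (e^a + e^-a)."""
    return (math.exp(a) - math.exp(-a)) / (math.exp(a) + math.exp(-a))


for a in [-2.0, 0.0, 0.5, 3.0]:
    # math.tanh, the explicit formula, and the rescaled logistic agree.
    print(a, math.tanh(a), tanh_explicit(a), 2 * logistic(2 * a) - 1)
```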

McCulloch-Pitts Neurons

The first artificial neurons were proposed by Warren McCulloch and Walter Pitts in 1943. Their neuron functions primarily as a Threshold Logic Unit (TLU): a computational unit that takes as its input an array of values and a corresponding array of weights for those values, sums the weighted values, compares that sum to a threshold value, and outputs 1 if the sum reaches the threshold (0 otherwise). In other words, a TLU is a single node with two or more inputs that uses a step function as its transfer function. These units are also known as McCulloch-Pitts (MP) neurons, in honor of their creators, or threshold neurons.

Even a single MP neuron can classify data. Imagine a node with two inputs, each with a weight of 1, and a threshold value, θ, of 1.5. For the inputs <0,0>, <0,1>, <1,0>, and <1,1>, the activations are 0, 1, 1, and 2, so the respective outputs are 0, 0, 0, and 1. Since the output is binary, the node can identify exactly two groups: in this case, the group where both inputs are 1s, designated by an output of 1, and the group where at least one input is not a 1 (either two 0s or a 1 and a 0), designated by an output of 0. This single TLU “knows” the Boolean AND function.
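The AND example can be reproduced with a small threshold logic unit. This Python sketch uses the weights (1, 1) and threshold θ = 1.5 from the text; the function name `tlu` is illustrative.

```python
def tlu(inputs, weights, theta):
    """Threshold logic unit: 1 if sum(X_i * W_i) >= theta, else 0."""
    a = sum(x * w for x, w in zip(inputs, weights))
    return 1 if a >= theta else 0


weights = [1, 1]
theta = 1.5

for pattern in [(0, 0), (0, 1), (1, 0), (1, 1)]:
    print(pattern, tlu(pattern, weights, theta))
# (0, 0) 0
# (0, 1) 0
# (1, 0) 0
# (1, 1) 1
```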

MP neurons have been generalized and modified to serve as the building block of artificial neural networks. The most frequent modification is to use the sigmoid function as the transfer function instead of the original threshold operation.
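As a sketch of that modification, the hard threshold can be swapped for the logistic function. In this version the threshold is subtracted from the activation so the curve is centred on θ, and a hypothetical `steepness` factor scales the result; neither detail comes from the original MP neuron. With the AND weights and θ = 1.5 from above, the outputs become graded values that exceed 0.5 only for <1,1>, and a larger steepness pushes them toward the 0/1 outputs of the step function.

```python
import math


def logistic(a):
    """Logistic sigmoid phi(a) = 1 / (1 + e^(-a))."""
    return 1.0 / (1.0 + math.exp(-a))


def sigmoid_node(inputs, weights, theta, steepness=1.0):
    """Hypothetical generalized node: a logistic curve centred on theta
    replaces the hard threshold, so the output is graded, not just 0 or 1."""
    a = sum(x * w for x, w in zip(inputs, weights))
    return logistic(steepness * (a - theta))


weights = [1, 1]
theta = 1.5
patterns = [(0, 0), (0, 1), (1, 0), (1, 1)]

for k in (1.0, 10.0):
    outputs = [sigmoid_node(p, weights, theta, steepness=k) for p in patterns]
    print(k, [round(y, 3) for y in outputs])
```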

In the next article, we will begin to put everything together. Although a single MP neuron is capable of classification, as illustrated above, the true power of this AI method becomes apparent only when nodes are connected into single- and multi-layer networks.